In data science, we are always looking for informative data, and there are many ways to collect it. One of the richest sources is the web: news websites (e.g. CNBC), social media (e.g. Twitter), e-commerce sites (e.g. Shopee), and so on. These sites make a great deal of useful information publicly visible, but to collect it systematically we have to use web scraping, preferably with web scraping proxies when the process requires sending many requests to the target website.

We know where the data comes from, but collecting it manually would take a lot of manpower and time. To overcome this, Python offers two popular libraries, BeautifulSoup and Selenium, that make collecting the data much easier.

Web scraping refers to the extraction or collection of web data into a format that is more useful to the user. For example, you might scrape product information from an e-commerce website into an Excel spreadsheet. Although web scraping can be done manually, in most cases you are better off using an automated tool.

Some of the advantages of web scraping, or automated web data collection, are:

- Speed.

- Data extraction at scale.

- Cost-effective.

- Flexibility and systematic approach.

- Performance reliability and robustness.

- Low maintenance costs.

- Automatic delivery of structured data.

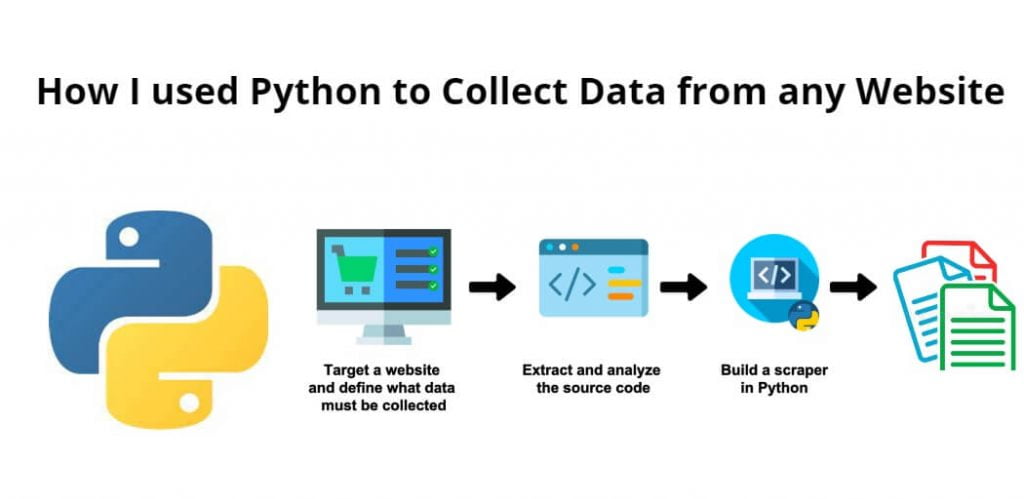

How I used Python to Collect Data from any Website

Follow these steps to scrape or collect web data from a website using Python:

Step 1 – Install Required Python Libraries

First, open a terminal or command line and run the following commands to install the Python libraries needed to scrape or collect web data:

pip install beautifulsoup4

pip install requests

If you are using the Anaconda Prompt, run the following commands instead to install the BeautifulSoup4 and requests libraries:

conda install beautifulsoup4

conda install requests
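Whichever installer you used, a quick check like the one below can confirm that both libraries import correctly. It is only a sanity check: it imports the packages and prints their version strings, and the exact versions will depend on your environment.

```python
# Verify that the scraping libraries installed correctly.
import bs4
import requests

# Both packages expose their version string as __version__.
print("beautifulsoup4:", bs4.__version__)
print("requests:", requests.__version__)
```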

Step 2 – Import Python Libraries

Once the required Python libraries are installed, import BeautifulSoup along with the other libraries used in this tutorial:

from bs4 import BeautifulSoup as bs

import pandas as pd

pd.set_option('display.max_colwidth', 500)  # show up to 500 characters per cell when displaying DataFrames

import time

import requests

import random

Step 3 – Access Web Pages

Now access the web page whose data we want to collect. Use the following code to request the page by its URL:

page = requests.get("http://mysite.com/")

page  # <Response [200]>

Displaying page shows the Response object together with its HTTP status code, which tells us whether the request succeeded. Here we are looking for <Response [200]>, meaning that we have successfully reached the website.
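In practice it helps to fail loudly rather than checking the status by eye. The sketch below wraps requests.get in a hypothetical helper, fetch_page (not part of requests), that sets a timeout and calls raise_for_status(), which raises requests.HTTPError for 4xx and 5xx responses. The User-Agent string is an illustrative assumption; some sites reject the default one that requests sends.

```python
import requests


def fetch_page(url, timeout=10):
    """Fetch a page, raising requests.HTTPError on a 4xx/5xx status.

    fetch_page is a hypothetical helper for this tutorial, not a requests API.
    """
    # Illustrative User-Agent; replace it with one that identifies your scraper.
    headers = {"User-Agent": "Mozilla/5.0 (compatible; tutorial-scraper/0.1)"}
    # The timeout keeps the call from hanging forever on an unresponsive server.
    response = requests.get(url, headers=headers, timeout=timeout)
    # Raises requests.HTTPError for client (4xx) and server (5xx) errors.
    response.raise_for_status()
    return response


# Usage (performs a real network request):
# page = fetch_page("http://mysite.com/")
# print(page.status_code)  # 200 on success
```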

Step 4 – Parsing the Web Page

Once the page has been retrieved, use the following code to parse it with BeautifulSoup:

soup = bs(page.content, "html.parser")

soup

Running this code displays the page's parsed HTML document.
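To see what the parser produces without requesting a live site, the sketch below parses a small, made-up HTML string that stands in for page.content. Passing "html.parser" explicitly selects Python's built-in parser (no extra install needed) and avoids the warning BeautifulSoup emits when no parser is named. prettify() returns the re-indented HTML, which is easier to read than the raw document.

```python
from bs4 import BeautifulSoup

# A made-up HTML document standing in for page.content.
html = """
<html>
  <head><title>Sample Blog</title></head>
  <body>
    <h1 class="title">How I used Python to Collect Data from any Website</h1>
    <span class="author">Jane Doe</span>
  </body>
</html>
"""

# "html.parser" is Python's built-in HTML parser.
soup = BeautifulSoup(html, "html.parser")

# prettify() returns the parse tree as an indented HTML string.
print(soup.prettify())

# The <title> element is reachable as an attribute of the soup.
print(soup.title.string)  # Sample Blog
```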

Step 5 – Navigating the Soup

Now we will need to find the exact thing we are looking for in the parsed HTML document. Let’s start by finding the title of the page.

An easy way to find what we are looking for is to:

- Visit the webpage and find the desired piece of information (in our case, the title).

- Highlight that piece of information (the title of the page).

- Right-click it and select Inspect.

This opens the browser's developer tools with the corresponding HTML element highlighted.

The highlighted element contains the title we are looking for. Click the arrow to the left of it to expand the element and see the title text in the code.
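The tag name and class attribute shown in the inspector are what we pass to BeautifulSoup. As a sketch on made-up markup (the class names post, title, and author are assumptions about the target page), the element can be reached by tag name as an attribute of the soup, or with a CSS selector through select_one(). Chrome's developer tools can also copy a selector for the highlighted element (right-click it, then Copy > Copy selector).

```python
from bs4 import BeautifulSoup

# Made-up markup mirroring what the inspector might show around the title.
html = '<div class="post"><h1 class="title">Sample title</h1><span class="author">Jane Doe</span></div>'
soup = BeautifulSoup(html, "html.parser")

# Attribute-style access returns the first tag with that name.
print(soup.h1)                       # <h1 class="title">Sample title</h1>

# select_one() takes a CSS selector: an <h1> tag with class "title".
title_tag = soup.select_one("h1.title")
print(title_tag.get_text())          # Sample title

# Moving up the tree: the enclosing <div> holds both the title and the author.
print(title_tag.parent["class"])     # ['post']
```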

Example 1 – Find HTML Tags by a Specific Attribute

The page contains many tags, and each tag can have several attributes, so the question is how to pick out only the element we want. BeautifulSoup provides two methods for this: find(), which returns the first matching tag, and find_all(), which returns a list of all matching tags.

To find the h1 tag whose class attribute is title, we use find():

# Search for a tag by its name and class attribute

soup.find('h1', class_='title')

And the output will be:

Out[10]: <h1 class="title">How I used Python to Collect Data from any Website</h1>

From this, we can see that we are able to successfully locate and retrieve the code and text containing the title of the page we needed.
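The tag returned by find() still contains markup. To keep only what we need, get_text() extracts the text, and attributes can be read like dictionary keys or with get(). The sketch below, again on made-up markup, also shows the practical difference between the two search methods: find() returns the first match or None, while find_all() returns a list of every match.

```python
from bs4 import BeautifulSoup

# Made-up markup with two matching titles and one non-matching heading.
html = """
<h1 class="title">First post</h1>
<h1 class="title">Second post</h1>
<h1 class="subtitle">Not a title</h1>
"""
soup = BeautifulSoup(html, "html.parser")

# find() returns only the first matching tag.
first = soup.find("h1", class_="title")
print(first.get_text(strip=True))   # First post
print(first.get("class"))           # ['title'] (class is multi-valued, so it is a list)

# find_all() returns every matching tag as a list.
titles = [tag.get_text(strip=True) for tag in soup.find_all("h1", class_="title")]
print(titles)                        # ['First post', 'Second post']

# find() returns None when nothing matches, so check before using the result.
missing = soup.find("h2")
print(missing is None)               # True
```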

Next, suppose we want to find the author of each post. We can repeat the same steps (inspect the element and note its class) and use find_all() to collect every author name on the page:

authors = [i.text for i in soup.find_all(class_='author')]
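Collecting titles and authors in two separate lists and pairing them afterwards only works if every post has exactly one of each. A more robust sketch, assuming (hypothetically) that each post sits in its own container element with class post, looks up the title and author inside each container so they stay paired even when an author is missing:

```python
from bs4 import BeautifulSoup

# Made-up markup: each post is wrapped in a container with class "post".
html = """
<div class="post"><h2 class="title">Post A</h2><span class="author">Alice</span></div>
<div class="post"><h2 class="title">Post B</h2></div>
<div class="post"><h2 class="title">Post C</h2><span class="author">Carol</span></div>
"""
soup = BeautifulSoup(html, "html.parser")

records = []
for post in soup.find_all(class_="post"):
    # Search only inside this post so the title and author stay matched.
    title = post.find(class_="title")
    author = post.find(class_="author")
    records.append({
        "title": title.get_text(strip=True) if title is not None else None,
        "author": author.get_text(strip=True) if author is not None else None,
    })

print(records)
```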

Example 2 – Access Multiple Web Pages

Now that we know how to retrieve data from a single web page, we can move on to the following pages. As the website shows, not all the titles are stored on a single page, so we must navigate to different pages of the website to collect more of them.

Notice that the URL of each page contains a changing page number:

- http://tutsmake.com/page/2/

- http://tutsmake.com/page/3/

- etc.

Knowing this we can create a simple list of URLs to iterate through in order to access different pages in the website:

urls = [f"http://tutsmake.com/page/{i}/" for i in range(1, 11)]

urls

This returns a list of URLs for pages 1 through 10.

From this list, we can write another for loop that requests each page and collects the titles and their respective authors.
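That loop might look like the sketch below. It is an outline under stated assumptions rather than a finished scraper: scrape_pages is a hypothetical helper, the class names title and author are guesses about the target markup, and the random pause between requests uses the time and random modules imported in Step 2 to avoid hammering the server. Results go into a pandas DataFrame, which can then be saved with to_csv() for use in a spreadsheet.

```python
import random
import time

import pandas as pd
import requests
from bs4 import BeautifulSoup as bs


def scrape_pages(urls, delay_range=(1, 3)):
    """Collect title/author pairs from each page in urls.

    scrape_pages is hypothetical; the "title" and "author" class names
    are assumptions about the target markup.
    """
    rows = []
    for url in urls:
        page = requests.get(url, timeout=10)
        page.raise_for_status()  # stop on 4xx/5xx instead of parsing an error page
        soup = bs(page.content, "html.parser")
        titles = [t.get_text(strip=True) for t in soup.find_all(class_="title")]
        authors = [a.get_text(strip=True) for a in soup.find_all(class_="author")]
        # Assumes one author per title; zip() pairs items by position
        # and stops at the shorter list.
        for title, author in zip(titles, authors):
            rows.append({"url": url, "title": title, "author": author})
        # Random pause between requests to reduce load on the server.
        time.sleep(random.uniform(*delay_range))
    return pd.DataFrame(rows, columns=["url", "title", "author"])


# Usage (performs real network requests):
# urls = [f"http://tutsmake.com/page/{i}/" for i in range(1, 11)]
# df = scrape_pages(urls)
# df.to_csv("titles_authors.csv", index=False)
```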

Conclusion

In this step-by-step tutorial, we have learned the basics of web scraping. Even though the example is quite simple, the same techniques apply to many other websites. Websites that load their content with JavaScript or require extensive user interaction will need another Python library, such as Selenium. However, many websites can be scraped with requests and BeautifulSoup alone, and the walkthrough here should be enough to get you started.